Google will soon allow many banned creators back on YouTube. The change affects channels removed for breaking past COVID-19 and election rules.

Google’s parent firm, Alphabet, shared the news on Tuesday in a letter to Congress. The letter follows a long probe by the House Judiciary Committee into tech censorship. The letter called government pressure on the company “unacceptable and wrong.”

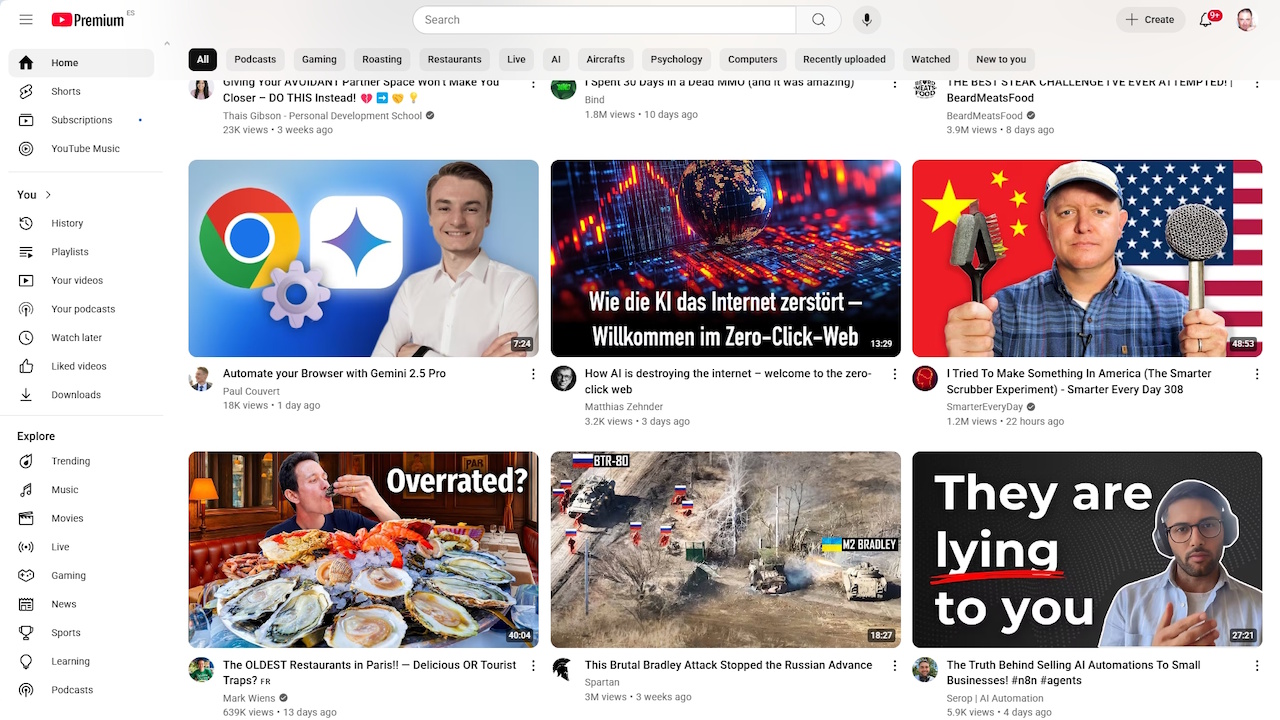

The new policy brings YouTube closer to other companies, such as Meta, that have also eased their content rules. The move signals a major shift in how the world’s largest video platform handles controversial speech.

A Reversal Under Congressional Pressure

The policy change was revealed in a letter to House Judiciary Committee Chairman Jim Jordan.

The committee’s investigation pressured Google for months, issuing a subpoena in early 2025. Alphabet’s letter states that the company will “provide an opportunity for all creators to rejoin the platform” if they were previously terminated.

This could mean the return of high-profile conservative voices like Dan Bongino and Steve Bannon, who were banned for violating misinformation policies.

Chairman Jordan celebrated the development on X, stating, “This is another victory in the fight against censorship.” The reversal is a direct consequence of sustained political oversight.

Google’s letter also contained a stark admission about government influence. Alphabet lawyer Daniel Donovan wrote that senior Biden administration officials had pressed the company to remove content that did not violate its policies.

This mirrors claims that Meta CEO Mark Zuckerberg made in August 2024, when he said, “I believe the government pressure was wrong, and I regret that we were not more outspoken about it.”

Scrapping COVID and Election Policies

At the heart of the reinstatement offer is the formal end of the specific content rules that led to the bans. According to the company’s letter, YouTube had already retired its policy regarding the integrity of the 2020 U.S. election back in 2023.

By December 2024, it had also ended all its stand-alone COVID-19 misinformation policies, paving the way for this week’s announcement.

The retirement of the election integrity policy was particularly notable, as it was done to “allow for discussion of possible widespread fraud, errors, or glitches occurring in the 2020 and other past U.S. Presidential elections,” per a company statement.

This decision effectively dismantled the framework used to suspend many accounts during a contentious period.

This marks a significant departure from the platform’s aggressive stance during the pandemic.

In early 2020, YouTube demonetized all COVID-related content under its “sensitive events” policy, which was designed for short-term events involving loss of life. The platform quickly found this approach unsustainable as the pandemic became a long-term global reality.

The company soon reversed course for news outlets and other creators. Then-CEO Susan Wojcicki noted at the time, “It’s becoming clear this issue is now an ongoing and important part of everyday conversation, and we want to make sure news organizations and creators can continue producing quality videos in a sustainable way.”

Now, the platform is moving even further away from direct moderation on these topics. In its letter, the company made a firm statement on fact-checking, asserting that it “has not and will not empower fact-checkers to take action on or label content.”

Instead, Google said it is testing a feature similar to X’s Community Notes to add context to videos.

This public disavowal of third-party fact-checkers aligns with a broader industry retreat from such partnerships, signaling a fundamental shift in how major platforms view their role in verifying information.

A Broader Industry Shift From Content Policing

YouTube’s decision is not happening in a vacuum. It reflects a significant strategic pivot across Silicon Valley.

In June 2025, YouTube had already quietly relaxed its internal rules, allowing more hate speech and misinformation if the content was deemed to be in the “public interest.”

A spokesperson at the time said the goal was “to protect free expression on YouTube while mitigating egregious harm.”

This trend is visible across major platforms. Meta has scaled back its fact-checking programs, and other companies are increasingly relying on AI for moderation, a move that has its own set of challenges.

This pivot toward more permissive standards in the U.S. clashes with regulatory pressure abroad, where the EU is preparing large fines for content moderation failures.

It also runs contrary to demands from safety advocates who have protested online harms. For now, the trend in Big Tech is clear: a retreat from the role of speech police.